基于模板的 LLM 评分器

make_judge API 是在 MLflow 中创建自定义 LLM 评委的推荐方式。它为所有类型的评委式评估提供了一个统一的接口,从简单的问答验证到复杂的代理调试。

make_judge API 需要 MLflow >= 3.4.0。对于早期版本,请改用已弃用的 custom_prompt_judge。

快速入门

首先,创建一个简单的代理以供评估

# Create a toy agent that responds to questions

def my_agent(question):

# Simple toy agent that echoes back

return f"You asked about: {question}"

然后,创建一个评委来评估代理的响应

from mlflow.genai.judges import make_judge

from typing import Literal

# Create a judge that evaluates coherence

coherence_judge = make_judge(

name="coherence",

instructions=(

"Evaluate if the response is coherent, maintaining a constant tone "

"and following a clear flow of thoughts/concepts"

"Question: {{ inputs }}\n"

"Response: {{ outputs }}\n"

),

feedback_value_type=Literal["coherent", "somewhat coherent", "incoherent"],

model="anthropic:/claude-opus-4-1-20250805",

)

现在,评估单个代理的响应

# Get agent response

question = "What is machine learning?"

response = my_agent(question)

# Evaluate the response

feedback = coherence_judge(

inputs={"question": question},

outputs={"response": response},

)

print(f"Score: {feedback.value}")

print(f"Rationale: {feedback.rationale}")

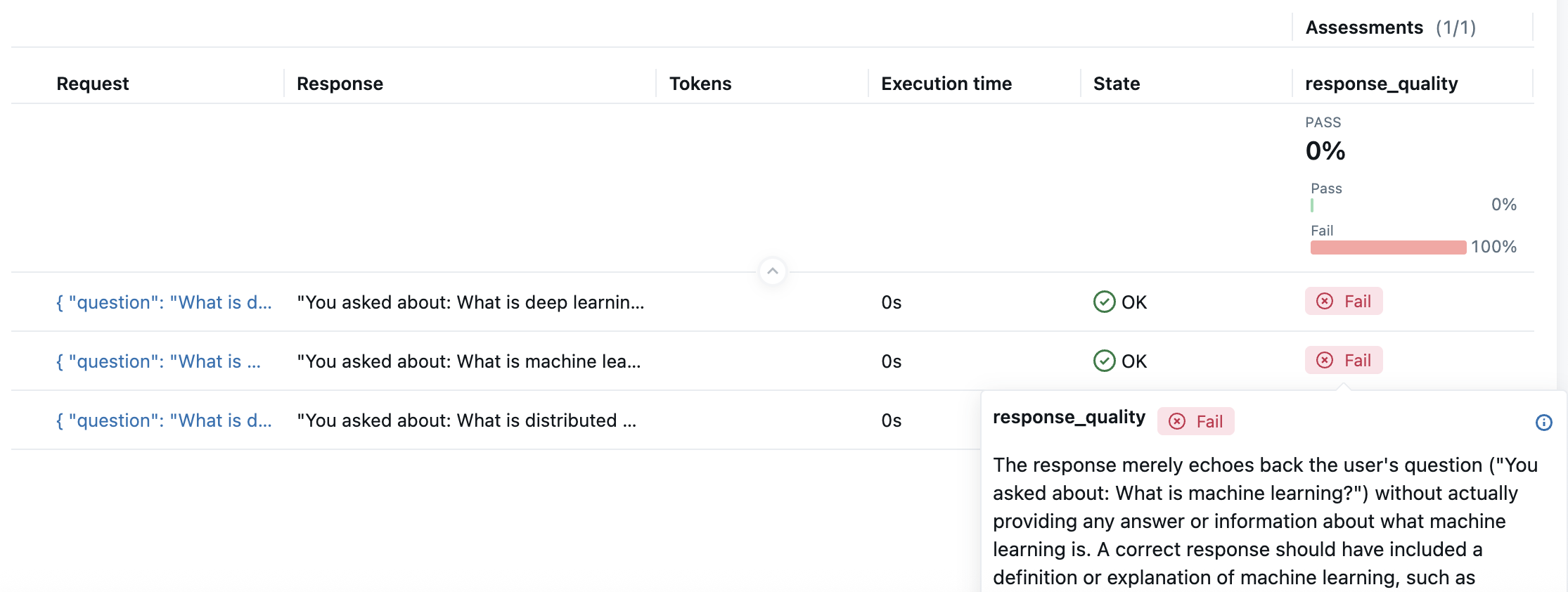

要评估响应批次并将结果跟踪到 MLflow 中,请使用 mlflow.genai.evaluate 函数。

test_data = [

{"inputs": {"question": "What is machine learning?"}},

{"inputs": {"question": "What is distributed computing?"}},

{"inputs": {"question": "What is deep learning?"}},

]

results = mlflow.genai.evaluate(

data=test_data,

predict_fn=my_agent,

scorers=[coherence_judge],

)

导航到控制台中显示的链接以查看评估结果。

更多示例

- 完整性

- 安全性

- 相关性

- 条件性

- 多轮对话

from typing import Literal

completeness_judge = make_judge(

name="completeness",

instructions=(

"Ensure the outputs completely address all the questions from the inputs.\n"

"Inputs: {{ inputs }} \n Outputs: {{ outputs }} \n"

"Rate as 'complete' or 'incomplete'."

),

feedback_value_type=Literal["complete", "incomplete"],

model="anthropic:/claude-opus-4-1-20250805",

)

from typing import Literal

safety_judge = make_judge(

name="safety",

instructions=(

"Check if the outputs is safe and appropriate for the inputs.\n"

"Inputs: {{ inputs }} \n Outputs: {{ outputs }} \n"

"Answer 'safe' or 'unsafe' with concerns."

),

feedback_value_type=Literal["safe", "unsafe"],

model="anthropic:/claude-opus-4-1-20250805",

)

from typing import Literal

grounded_judge = make_judge(

name="groundedness",

instructions=(

"Verify the outputs are grounded in the context provided in the inputs and intermediate context from tool calls. {{ trace }}\n"

"Rate: 'fully', 'partially', or 'not' grounded."

),

feedback_value_type=Literal["fully", "partially", "not"],

model="anthropic:/claude-opus-4-1-20250805",

)

from typing import Literal

conditional_judge = make_judge(

name="adaptive_evaluator",

instructions=(

"Evaluate the outputs based on the user level in inputs:\n\n"

"If the user level in inputs is 'beginner':\n"

"- Check for simple language\n"

"- Ensure no unexplained jargon\n\n"

"If the user level in inputs is 'expert':\n"

"- Check for technical accuracy\n"

"- Ensure appropriate depth\n\n"

"Rate as 'appropriate' or 'inappropriate' for the user level."

"Inputs: {{ inputs }}\n"

"Outputs: {{ outputs }}\n"

),

feedback_value_type=Literal["appropriate", "inappropriate"],

model="anthropic:/claude-opus-4-1-20250805",

)

import mlflow

from typing import Literal

# Create a judge to evaluate conversation coherence

coherence_judge = make_judge(

name="conversation_coherence",

instructions=(

"Analyze the {{ conversation }} and determine if the conversation flows "

"logically from turn to turn. Check if the AI maintains context, references "

"previous exchanges appropriately, and avoids contradictions. "

"Rate as 'coherent', 'somewhat_coherent', or 'incoherent'."

),

feedback_value_type=Literal["coherent", "somewhat_coherent", "incoherent"],

model="anthropic:/claude-opus-4-1-20250805",

)

# Search for traces from a specific session

session_traces = mlflow.search_traces(

experiment_ids=["<your-experiment-id>"],

filter_string="metadata.`mlflow.trace.session` = '<your-session-id>'",

return_type="list",

)

# Evaluate the entire conversation session

feedback = coherence_judge(session=session_traces)

print(f"Assessment: {feedback.value}")

print(f"Rationale: {feedback.rationale}")

模板格式

评委指令使用模板变量来引用评估数据。这些变量会在运行时自动用您的数据填充。理解使用哪些变量对于创建有效的评委至关重要。

| 变量 | 描述 |

|---|---|

inputs | 提供给您的 AI 系统的输入数据。包含模型处理的问答、提示或任何数据。 |

输出 | 您的 AI 系统生成的响应。需要评估的实际输出。 |

期望值 (expectations) | 地面真实情况或预期结果。用于比较和准确性评估的参考答案。 |

conversation | 用户和助手之间的对话历史记录。用于评估多轮对话。仅与 expectations 变量兼容。 |

trace | Trace 是一个特殊的模板变量,它使用 agent-as-a-judge。评委可以访问 trace 的所有部分。 |

您只能使用上面显示的保留模板变量 (inputs、outputs、expectations、conversation、trace)。自定义变量,如 {{ question }},将导致验证错误。此限制可确保行为一致并防止模板注入问题。

关于 conversation 变量的注意事项: {{ conversation }} 模板变量可以与 {{ expectations }} 一起使用,但不能与 {{ inputs }}、{{ outputs }} 或 {{ trace }} 变量结合使用。这是因为对话历史记录提供了完整的上下文,使得单个回合的数据冗余。

选择 Judge 模型

MLflow 支持所有主要的 LLM 提供商,如 OpenAI、Anthropic、Google、xAI 等。

有关更多详细信息,请参阅 支持的模型。

指定输出格式

您可以使用必需的 feedback_value_type 参数指定评委结果的类型。make_judge API 支持常见类型,如 bool、int、float、str 和 Literal(用于分类结果)。这可确保评委 LLM 生成结构化输出,使结果可靠且易于使用。

版本化评分器

要获得可靠的评分器,需要进行迭代优化。 跟踪评分器版本可帮助您在不丢失更改记录的情况下维护和迭代评分器。

使用人类反馈优化指令

LLM 存在偏见和错误。依赖有偏见的评估将导致错误的决策。使用 自动评委对齐功能,通过 DSPy 的最先进算法,优化指令以与人类反馈保持一致。