Tracing AG2🤖

AG2 is an open-source framework for building and orchestrating interactions between AI agents.

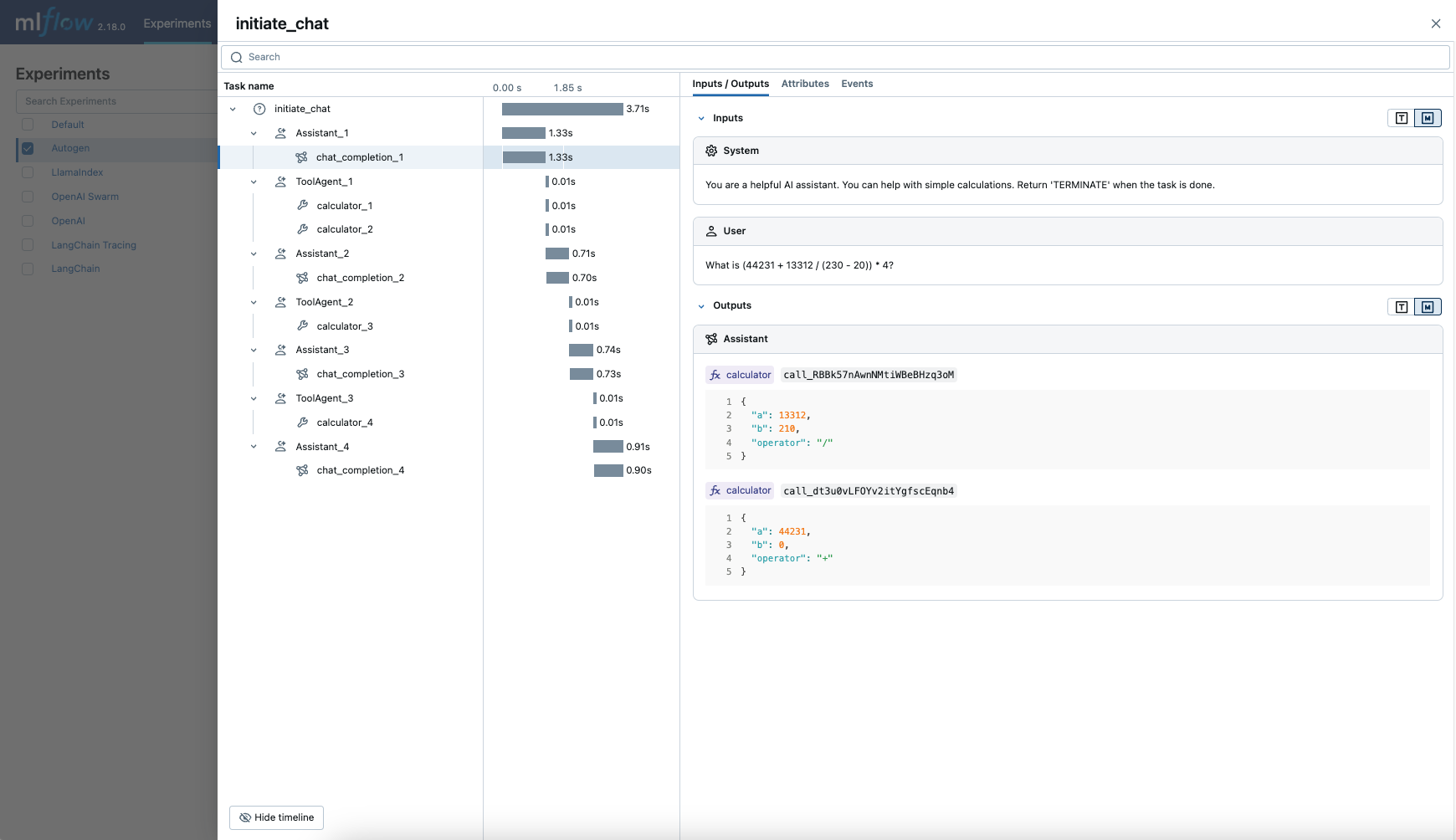

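MLflow Tracing provides automatic tracing for AG2, an open-source multi-agent framework. Enable auto tracing for AG2 by calling the `mlflow.ag2.autolog()` function. MLflow then captures nested traces as the agents execute and logs them to the active MLflow Experiment. Because AG2 is built on top of AutoGen 0.2, you can also use this integration with AutoGen 0.2.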

```python
import mlflow

mlflow.ag2.autolog()
```

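MLflow captures the following information about a multi-agent execution:

- Which agents are invoked in each turn
- The messages passed between agents
- The LLM and tool calls made by each agent in a given turn, organized by agent and turn
- Latencies
- Any exception, if one is raised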

Basic Example

```python
import os
from typing import Annotated, Literal

from autogen import ConversableAgent

import mlflow

# Turn on auto tracing for AG2
mlflow.ag2.autolog()

# Optional: Set a tracking URI and an experiment
# (replace the URI below with the address of your MLflow tracking server)
mlflow.set_tracking_uri("https://:5000")
mlflow.set_experiment("AG2")

# Define a simple multi-agent workflow using AG2
config_list = [
    {
        "model": "gpt-4o-mini",
        # Please set your OpenAI API Key to the OPENAI_API_KEY env var before running this example
        "api_key": os.environ.get("OPENAI_API_KEY"),
    }
]

Operator = Literal["+", "-", "*", "/"]


def calculator(a: int, b: int, operator: Annotated[Operator, "operator"]) -> int:
    if operator == "+":
        return a + b
    elif operator == "-":
        return a - b
    elif operator == "*":
        return a * b
    elif operator == "/":
        return int(a / b)
    else:
        raise ValueError("Invalid operator")


# First define the assistant agent that suggests tool calls.
assistant = ConversableAgent(
    name="Assistant",
    system_message="You are a helpful AI assistant. "
    "You can help with simple calculations. "
    "Return 'TERMINATE' when the task is done.",
    llm_config={"config_list": config_list},
)

# The user proxy agent is used for interacting with the assistant agent
# and executes tool calls.
user_proxy = ConversableAgent(
    name="Tool Agent",
    llm_config=False,
    is_termination_msg=lambda msg: msg.get("content") is not None
    and "TERMINATE" in msg["content"],
    human_input_mode="NEVER",
)

# Register the tool signature with the assistant agent.
assistant.register_for_llm(name="calculator", description="A simple calculator")(
    calculator
)
# Register the tool function with the user proxy agent, which executes it.
user_proxy.register_for_execution(name="calculator")(calculator)

response = user_proxy.initiate_chat(
    assistant, message="What is (44231 + 13312 / (230 - 20)) * 4?"
)
```
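Because the `calculator` tool accepts only two operands, the assistant has to split the question into several tool calls, and each call appears as its own span in the trace. The sketch below runs one plausible sequence of calls locally, with no LLM involved. The order the model actually chooses is an assumption and may differ. It also shows that the tool's `"/"` truncates to an integer, so the chained answer differs slightly from the exact value.

```python
from typing import Annotated, Literal

Operator = Literal["+", "-", "*", "/"]


def calculator(a: int, b: int, operator: Annotated[Operator, "operator"]) -> int:
    # Same tool as in the basic example above.
    if operator == "+":
        return a + b
    elif operator == "-":
        return a - b
    elif operator == "*":
        return a * b
    elif operator == "/":
        return int(a / b)  # truncates toward zero
    else:
        raise ValueError("Invalid operator")


# One possible decomposition of (44231 + 13312 / (230 - 20)) * 4 into
# binary tool calls (an assumption; the LLM may order them differently).
step1 = calculator(230, 20, "-")        # 210
step2 = calculator(13312, step1, "/")   # int(63.39...) -> 63
step3 = calculator(44231, step2, "+")   # 44294
answer = calculator(step3, 4, "*")      # 177176

# Exact floating-point value, for comparison with the truncated chain.
exact = (44231 + 13312 / (230 - 20)) * 4
print(answer, exact)
```

If the final answer in a trace looks slightly off, inspecting the individual `calculator` spans is the quickest way to see where integer truncation entered.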

Token Usage

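MLflow >= 3.2.0 supports token usage tracking for AG2. The token usage of each LLM call is logged in the `mlflow.chat.tokenUsage` span attribute, and the total token usage across the whole trace is available in the `token_usage` field of the trace info object.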

```python
import mlflow

mlflow.ag2.autolog()

# Register and run the tool with the agents defined in the section above.
assistant.register_for_llm(name="calculator", description="A simple calculator")(
    calculator
)
user_proxy.register_for_execution(name="calculator")(calculator)

response = user_proxy.initiate_chat(
    assistant, message="What is (44231 + 13312 / (230 - 20)) * 4?"
)

# Get the trace object just created
last_trace_id = mlflow.get_last_active_trace_id()
trace = mlflow.get_trace(trace_id=last_trace_id)

# Print the token usage
total_usage = trace.info.token_usage
print("== Total token usage: ==")
print(f" Input tokens: {total_usage['input_tokens']}")
print(f" Output tokens: {total_usage['output_tokens']}")
print(f" Total tokens: {total_usage['total_tokens']}")

# Print the token usage for each LLM call
print("\n== Detailed usage for each LLM call: ==")
for span in trace.data.spans:
    if usage := span.get_attribute("mlflow.chat.tokenUsage"):
        print(f"{span.name}:")
        print(f" Input tokens: {usage['input_tokens']}")
        print(f" Output tokens: {usage['output_tokens']}")
        print(f" Total tokens: {usage['total_tokens']}")
```

```bash
== Total token usage: ==
 Input tokens: 1569
 Output tokens: 229
 Total tokens: 1798

== Detailed usage for each LLM call: ==
chat_completion_1:
 Input tokens: 110
 Output tokens: 61
 Total tokens: 171
chat_completion_2:
 Input tokens: 191
 Output tokens: 61
 Total tokens: 252
chat_completion_3:
 Input tokens: 269
 Output tokens: 24
 Total tokens: 293
chat_completion_4:
 Input tokens: 302
 Output tokens: 23
 Total tokens: 325
chat_completion_5:
 Input tokens: 333
 Output tokens: 22
 Total tokens: 355
chat_completion_6:
 Input tokens: 364
 Output tokens: 38
 Total tokens: 402
```
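In the sample output above, the trace-level totals equal the sums of the per-call values (110 + 191 + 269 + 302 + 333 + 364 = 1569 input tokens). The stdlib sketch below reproduces that check from the printed numbers. `sum_usage` is a hypothetical helper written for this example. We assume, based on this sample, that the total is a plain sum of the per-call usage dicts. With a real trace, you would pass it the values returned by `span.get_attribute("mlflow.chat.tokenUsage")`, for example to total only a subset of spans.

```python
# Per-call usage copied from the sample output above.
per_call = {
    "chat_completion_1": {"input_tokens": 110, "output_tokens": 61, "total_tokens": 171},
    "chat_completion_2": {"input_tokens": 191, "output_tokens": 61, "total_tokens": 252},
    "chat_completion_3": {"input_tokens": 269, "output_tokens": 24, "total_tokens": 293},
    "chat_completion_4": {"input_tokens": 302, "output_tokens": 23, "total_tokens": 325},
    "chat_completion_5": {"input_tokens": 333, "output_tokens": 22, "total_tokens": 355},
    "chat_completion_6": {"input_tokens": 364, "output_tokens": 38, "total_tokens": 402},
}


def sum_usage(usages):
    """Hypothetical helper: add up usage dicts key by key (missing keys count as 0)."""
    totals = {"input_tokens": 0, "output_tokens": 0, "total_tokens": 0}
    for usage in usages:
        for key in totals:
            totals[key] += usage.get(key, 0)
    return totals


totals = sum_usage(per_call.values())
print(totals)  # {'input_tokens': 1569, 'output_tokens': 229, 'total_tokens': 1798}
```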

Disable Auto-tracing

Auto tracing for AG2 can be disabled globally by calling `mlflow.ag2.autolog(disable=True)` or `mlflow.autolog(disable=True)`.