Automatic Tracing Integrations

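MLflow Tracing integrates with a variety of generative AI libraries and provides a one-line automatic tracing experience for each of them (and for combinations of them!). See the page for each library for a detailed example of integrating it with MLflow.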

Tip

Is your favorite library missing from the list? Consider contributing to MLflow Tracing or submitting a feature request on our GitHub repository.

Enabling Multiple Auto Tracing Integrations

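As the generative AI tool ecosystem grows, it is increasingly common to combine several libraries to build a compound AI system. With MLflow Tracing, you can enable auto tracing for such multi-framework models and get a single, unified view of each request.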

For example, the following code enables auto tracing for both LangChain and OpenAI:

```python
import mlflow
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI

# Enable MLflow Tracing for both LangChain and OpenAI
mlflow.langchain.autolog()
mlflow.openai.autolog()

# Optional: Set a tracking URI and an experiment.
# Set the tracking URI first so the experiment is created on that server.
mlflow.set_tracking_uri("http://localhost:5000")
mlflow.set_experiment("LangChain")

# Define a chain that uses OpenAI as an LLM provider
llm = ChatOpenAI(model="gpt-4o-mini", temperature=0.7, max_tokens=1000)

prompt_template = PromptTemplate.from_template(
    "Answer the question as if you are {person}, fully embodying their style, wit, personality, and habits of speech. "
    "Emulate their quirks and mannerisms to the best of your ability, embracing their traits—even if they aren't entirely "
    "constructive or inoffensive. The question is: {question}"
)

chain = prompt_template | llm | StrOutputParser()

# Invoking the chain produces one trace containing both LangChain and OpenAI spans
chain.invoke(
    {
        "person": "Linus Torvalds",
        "question": "Can I just set everyone's access to sudo to make things easier?",
    }
)
```

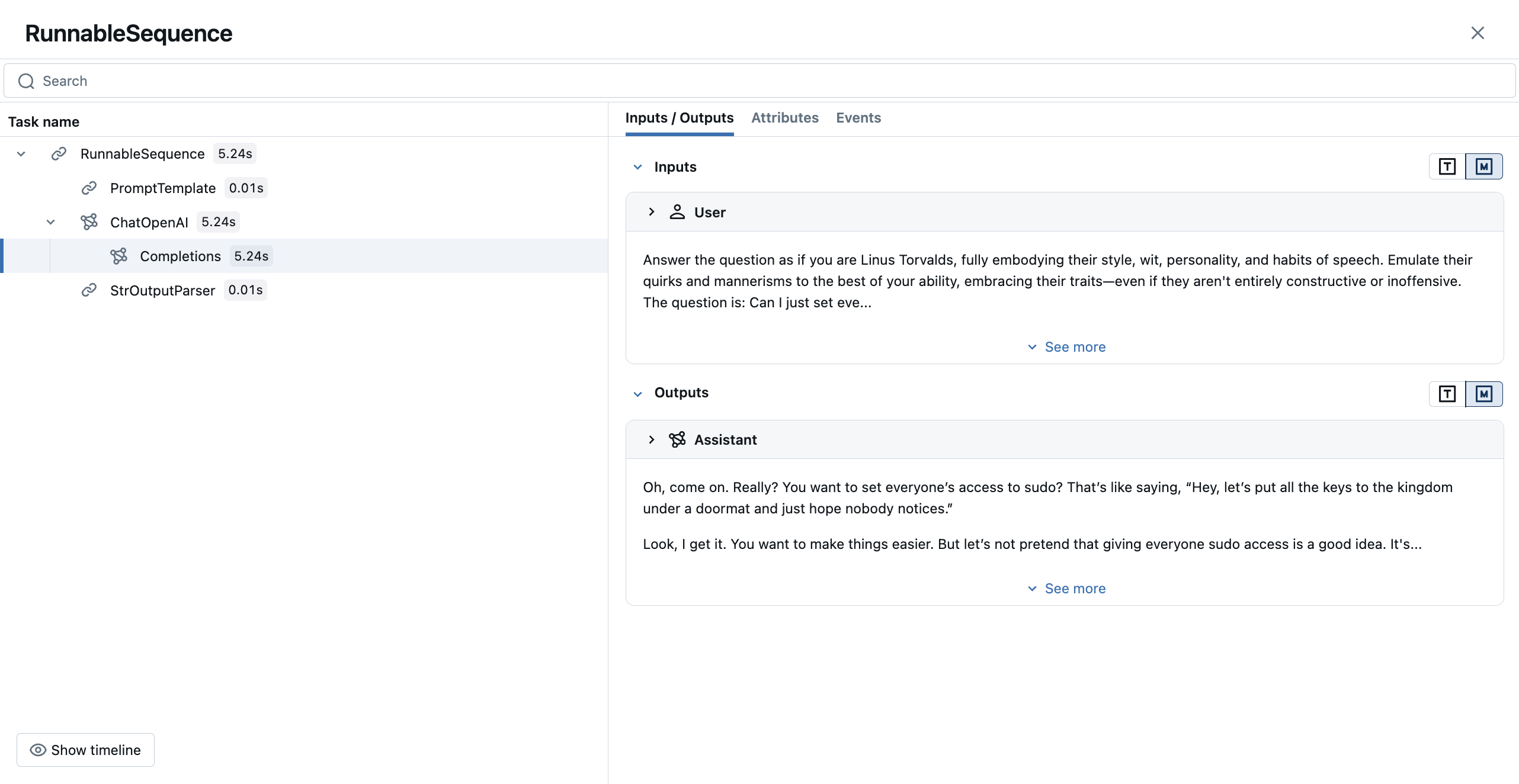

MLflow generates a single trace that combines the LangChain steps with the OpenAI LLM call, so you can inspect the raw input sent to the OpenAI LLM and the raw output it returned.

Disabling Auto Tracing

Auto tracing for an individual library can be disabled by calling `mlflow.<library>.autolog(disable=True)`. You can also disable tracing for all integrations at once with `mlflow.autolog(disable=True)`.